I often make reference to the Agile Testing Quadrants model to help explain different modes of testing, and how automation is just a part of a larger domain of testing. Recently, I discovered that Michael Bolton has evolved this model into something much more comprehensive and useful, particularly in response to challenges many testers face in Agile environments.

Update: Bolton’s presentation on this topic was later re-presented and recorded at EuroSTAR 2015, where you can see his full explanation of how traditional testing models can be enhanced to better serve modern development contexts.

The Traditional Agile Testing Quadrants

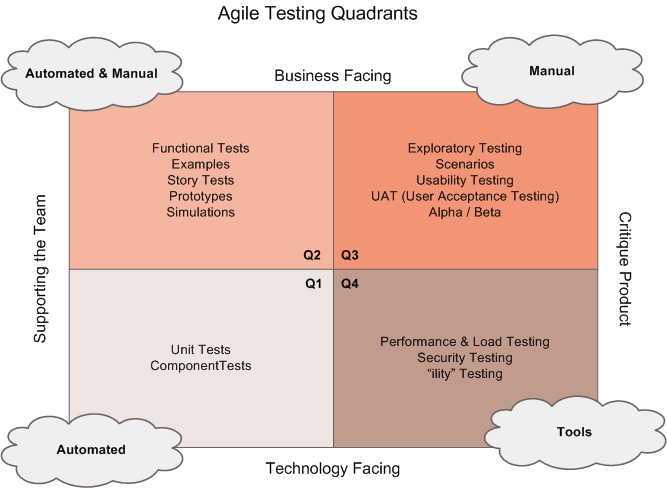

The original Agile Testing Quadrants, popularized by Lisa Crispin and Janet Gregory, provided a useful framework for thinking about different types of testing:

Quadrant 1 (Q1): Automated - Technology-Facing, Team Support

- Unit tests, component tests

- Purpose: Guide development and prevent defects

- Automation: Highly automated, fast feedback

- Owner: Primarily developers

Quadrant 2 (Q2): Automated & Manual - Business-Facing, Team Support

- Functional tests, examples, story tests, prototypes, simulations

- Purpose: Validate business requirements and behavior

- Automation: Mix of automated and manual testing

- Owner: Collaboration between testers, developers, and business

Quadrant 3 (Q3): Manual - Business-Facing, Product Critique

- Exploratory testing, scenarios, usability testing, UAT (User Acceptance Testing), alpha/beta testing

- Purpose: Discover unknown issues and validate user experience

- Automation: Primarily manual, human-centered

- Owner: Testers with business stakeholder input

Quadrant 4 (Q4): Tools - Technology-Facing, Product Critique

- Performance & load testing, security testing, “-ility” testing (e.g., scalability, reliability)

- Purpose: Evaluate technical qualities and constraints

- Automation: Specialized tools and automated analysis

- Owner: Specialists and technically-focused testers

This model helped teams understand that testing isn’t just about finding bugs, but also about supporting the development process and ensuring the product meets business needs.

Bolton’s Evolution

Michael Bolton has taken this foundational concept and fundamentally reconsidered it, moving toward a more nuanced view of the testing ecosystem. His approach, developed with James Bach as part of their Rapid Software Testing methodology, recognizes that:

- Testing is not just about quadrants - it’s about understanding the full ecosystem of activities that contribute to product quality

- Context matters - what works in one situation may not work in another

- Human judgment is irreplaceable - automation supports testing but doesn’t replace the need for skilled testers

The New Ecosystem Thinking

Bolton’s evolved model emphasizes:

Exploration and Investigation

Rather than just executing predefined tests, testers need to actively explore the product, investigating how it behaves under different conditions and scenarios.

Risk-Based Approach

Testing activities should be prioritized based on risk, not just coverage metrics or automation percentages.

Collaborative Discovery

Testing is most effective when it’s integrated into the entire development process, not treated as a separate phase or activity.

Practical Implications

This ecosystem thinking has several practical implications for how we approach testing:

- Tool Selection: Choose tools that support investigation and exploration, not just execution

- Skill Development: Invest in developing critical thinking and investigation skills

- Process Integration: Embed testing activities throughout the development lifecycle

- Measurement: Focus on meaningful metrics that reflect actual risk reduction

Moving Forward

As the testing field continues to evolve, frameworks like Bolton’s ecosystem model help us think more holistically about quality and testing. Rather than getting caught up in debates about automation vs. manual testing, we can focus on building systems that effectively manage risk and deliver value.

The key is to remember that testing is fundamentally about learning and discovery - identifying and defending product value while managing development costs effectively. Any model or framework we use should support and enhance that core purpose, not constrain it.